Perhaps the first seeds of human mistrust of robots were planted a century ago, when Czech playwright Karel Čapek coined the word “robot” in his play “R.U.R.” The play ends with a revolt in which the robots storm a factory and kill all the humans. Since then, the notion that some kind of artificial intelligence may supplant humankind as the dominant intelligent species on Earth has become a common theme in science fiction, appearing in films such as “Terminator” and “The Matrix.”

Another science fiction theme is sentient robots that feel mistreated by humans, as in “Blade Runner” or “Westworld.” In a galaxy far, far away, the “Star Wars” characters R2-D2 and C-3PO may be just droids, but in our world they might be considered slaves. In fact, “robot” comes from the Czech word “robota,” meaning forced labor.

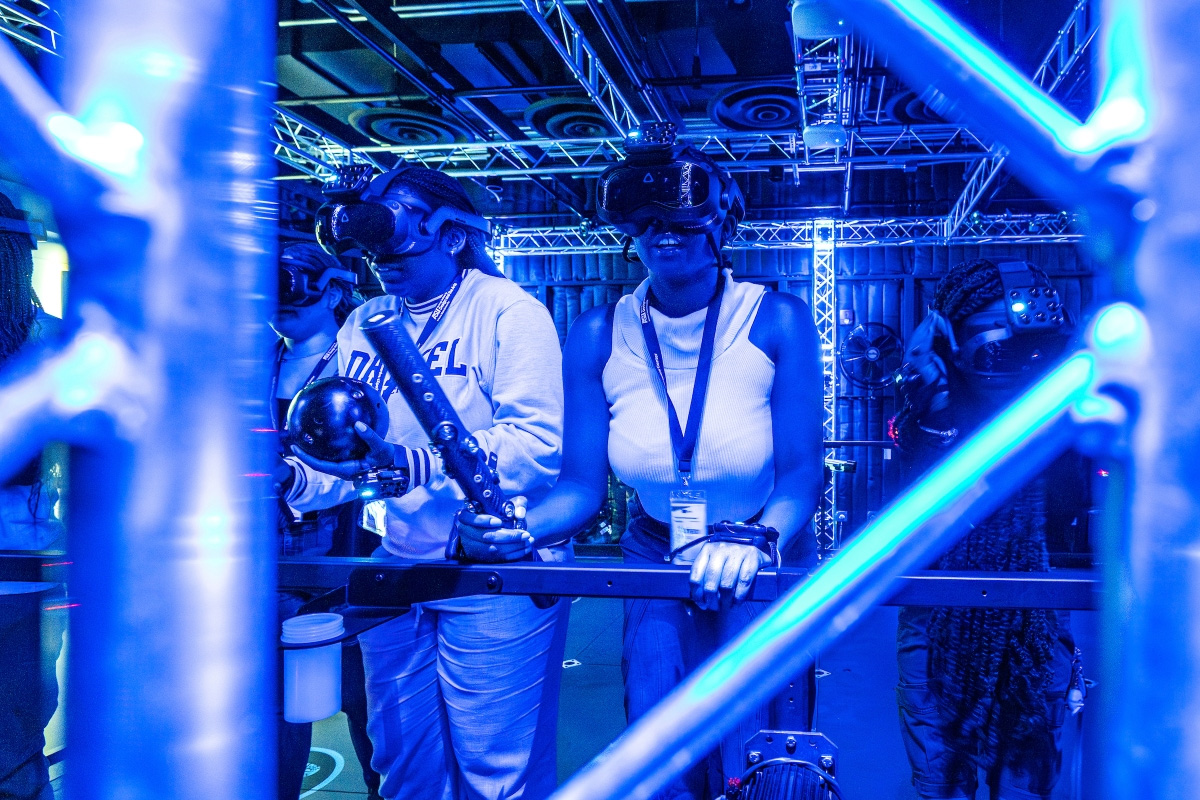

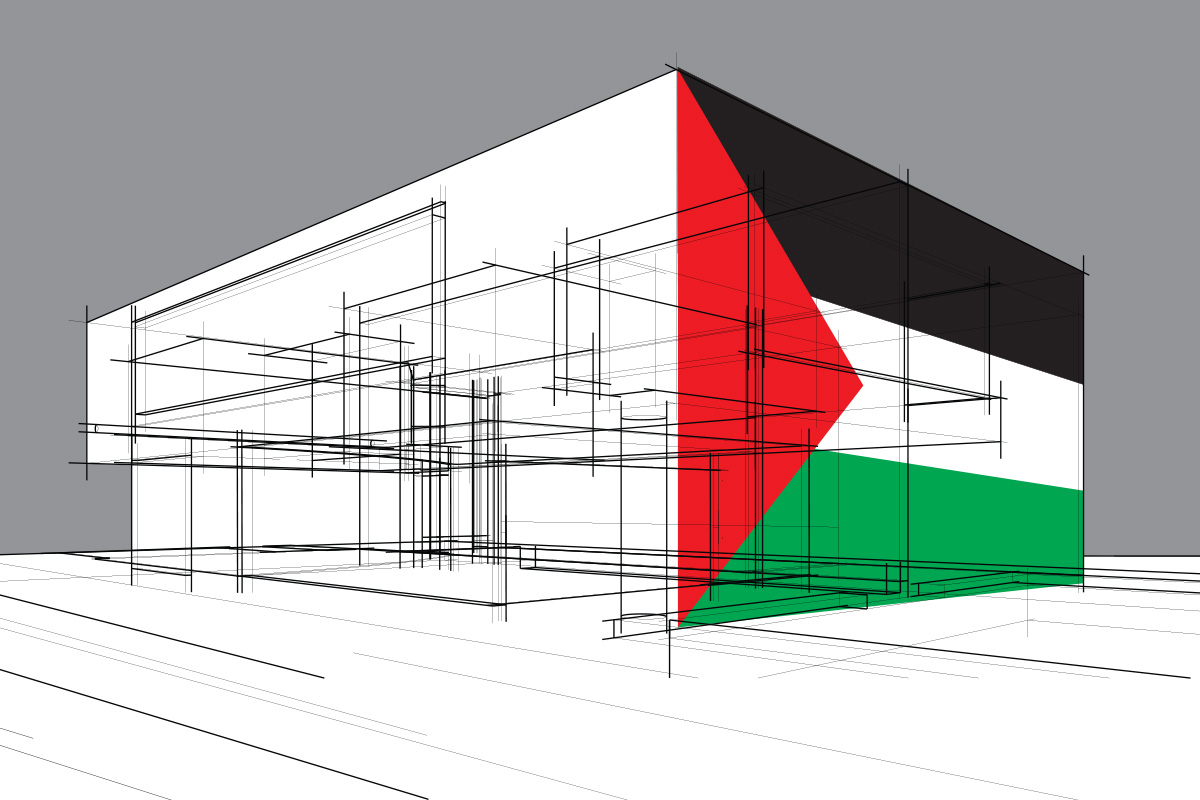

These themes raise an important question. As we approach the brink of developing true artificial intelligence, should we program these systems as our equals or as a means to an end, existing only to do our will? GHOST Lab invites visitors to ponder this and other ethical issues.

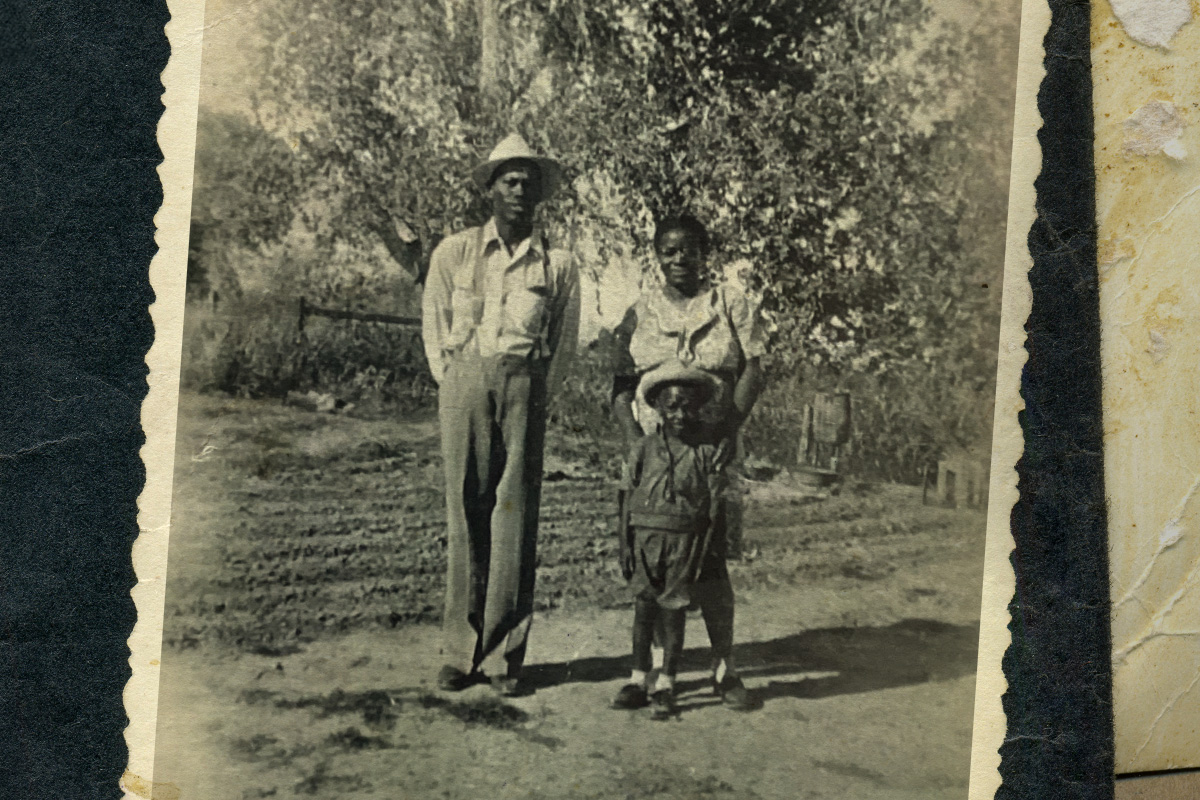

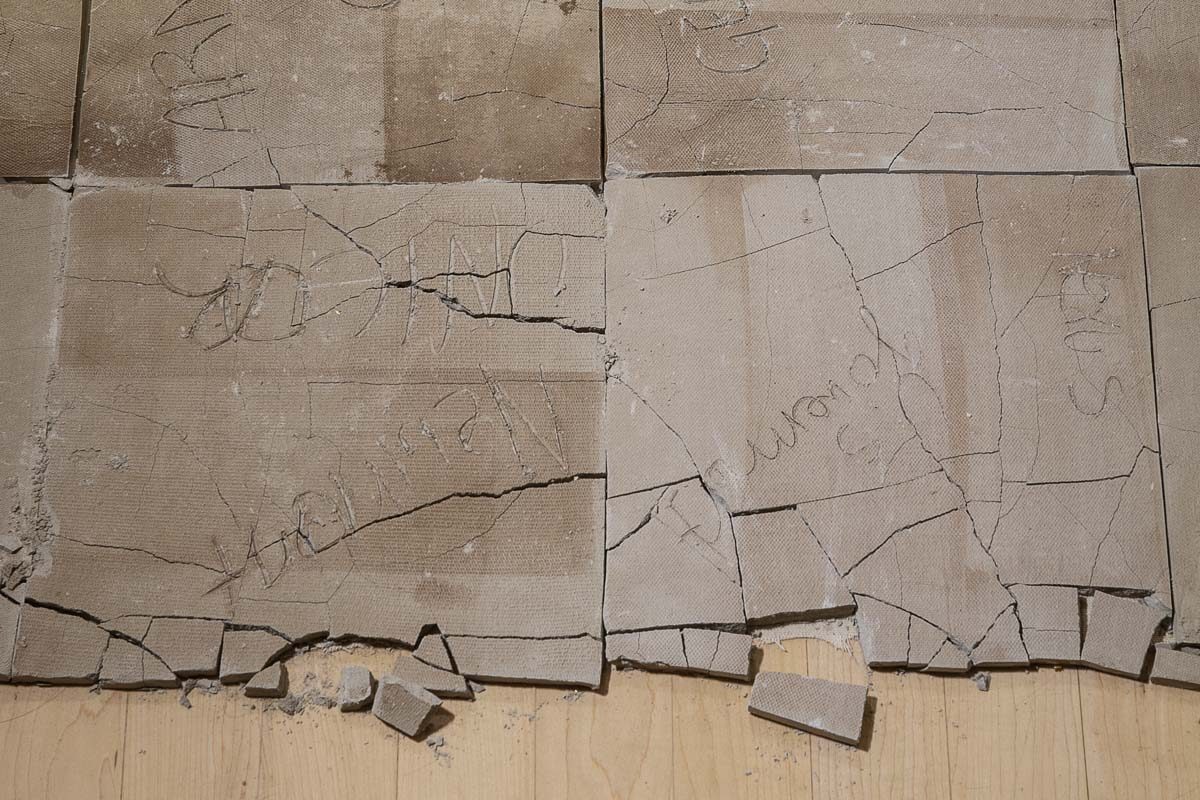

Gharavi wove a slavery theme into the art installation: its slate-gray walls bear cryptic words drawn from historic American slave laws and from Biblical passages on how slaves should be treated. The theme echoes through the sound installation, which artfully blends recordings from a Mars rover expedition with slave songs of survival. It was created by Max Bernstein, an ASU clinical assistant professor jointly appointed in The Sidney Poitier New American Film School and the School of Music, Dance and Theatre.

“When we think about robots and AI, we might imagine a future that is a dystopia, with robots and AI presenting a threat. Or we might imagine it producing a new kind of utopia where we don’t have to work anymore because we have robots taking care of us. Our feelings about robots and artificial intelligence tend to be kind of ambivalent,” Gharavi says.

“Social encounters with robots and AI are going to be ubiquitous features in our lives in the future,” he adds. “What do we want those interactions to feel like? We need to shape that future thoughtfully and carefully. We need to design and rehearse for it. Artists need to be part of these processes because we need that imagination and creativity.

“We wanted to create a space where people could meditate and reflect on the past, the future and the present, and ways in which all these things are haunted by our histories, by our stories, by our fantasies and by our nightmares.”

As we contemplate the future of space exploration, we may be reminded of that famous line from “Star Trek,” “to boldly go where no one has gone before.” Today it is clear that when it comes to new frontiers beyond the Earth, our AI and robot partners will be boldly going by our sides.

The Global Security Initiative is partially supported by Arizona’s Technology and Research Initiative Fund. TRIF investment has enabled hands-on training for tens of thousands of students across Arizona’s universities, thousands of scientific discoveries and patented technologies, and hundreds of new start-up companies. Publicly supported through voter approval, TRIF is an essential resource for growing Arizona’s economy and providing opportunities for Arizona residents to work, learn and thrive.

A version of this story originally appeared in ASU News.

Photos by Andy DeLisle.